Chinese room

The Chinese Room is a thought experiment presented by the philosopher John Searle in 1980 to challenge the thesis of strong artificial intelligence (AI). Searle argues that a computer cannot come to understand or be conscious merely by running a program, even if that program lets it produce human-like responses. The Chinese Room argument is a significant contribution to the philosophy of mind and to debates about artificial intelligence.

Background

The Chinese Room argument was introduced in Searle's paper "Minds, Brains, and Programs," published in the journal Behavioral and Brain Sciences. It was a direct response to proponents of strong AI, who hold that a computer running the right program would not merely mimic human intelligence but would genuinely understand and have consciousness akin to that of a human being.

The Argument

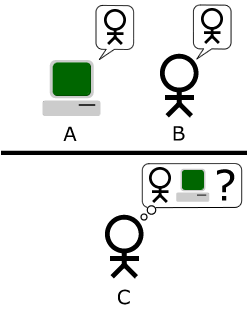

The core of the Chinese Room argument is a thought experiment. Imagine that a person who does not understand Chinese is locked in a room containing boxes of Chinese symbols (a database) and a rule book, written in English, for manipulating these symbols (the program). People outside the room pass in other Chinese symbols which, unknown to the person, are questions in Chinese (the input). By following the instructions in the rule book, the person assembles strings of symbols and passes them back out, and these turn out to be appropriate answers to the questions (the output).

To those outside, it appears that the person in the room understands Chinese, but the person is only manipulating symbols according to syntactic rules, with no understanding of what they mean. Searle argues that a computer executing a program is in the same position: it manipulates symbols by their form alone, so running the right program is not sufficient for understanding or consciousness, contrary to the claims of strong AI.
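To make the purely syntactic character of the rule book concrete, here is a deliberately simplified sketch in Python. It assumes the rule book can be reduced to a fixed lookup table of exact question-and-answer strings, whereas Searle's scenario allows an arbitrarily complex program; the table entries and the names `RULE_BOOK`, `FALLBACK_REPLY`, and `follow_rule_book` are hypothetical illustrations, not part of Searle's argument. The function matches incoming symbols by their shape alone and never consults what they mean.

```python
# Hypothetical rule book (illustrative only): exact incoming symbol strings
# mapped to outgoing ones. A real "program" in Searle's sense could be far
# more complex than a fixed table.
# The English glosses in these comments are for the reader; the lookup never uses them.
RULE_BOOK: dict[str, str] = {
    "你好吗？": "我很好，谢谢。",          # "How are you?" -> "I'm fine, thanks."
    "你会说中文吗？": "会，我会说中文。",  # "Do you speak Chinese?" -> "Yes, I speak Chinese."
}

# Hypothetical reply prescribed when no rule matches ("Sorry, I don't understand.").
FALLBACK_REPLY = "对不起，我不明白。"


def follow_rule_book(incoming: str) -> str:
    """Return the reply the rule book prescribes, matching symbols by form only."""
    return RULE_BOOK.get(incoming, FALLBACK_REPLY)


if __name__ == "__main__":
    print(follow_rule_book("你好吗？"))
    print(follow_rule_book("今天天气怎么样？"))  # "How is the weather today?" has no rule
```

From outside, the reply to 你好吗？ ("How are you?") looks competent, yet nothing in the code represents that meaning; the lookup would work identically if every string were replaced by arbitrary tokens.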

Implications

The Chinese Room argument has sparked extensive debate in artificial intelligence, cognitive science, and the philosophy of mind. Critics such as Daniel Dennett and Douglas Hofstadter have offered various rebuttals. One, known as the systems reply, holds that although the person alone does not understand Chinese, the system as a whole (the person together with the rule book and the symbols) could; others argue that understanding is not a prerequisite for intelligence.

Conclusion

The Chinese Room remains a pivotal argument in discussions about the nature of mind, consciousness, and the potential of artificial intelligence to truly replicate human understanding. It raises fundamental questions about what it means to "understand" and whether machines can possess such an attribute.




Credits:Most images are courtesy of Wikimedia commons, and templates, categories Wikipedia, licensed under CC BY SA or similar.

Contributors: Prab R. Tumpati, MD