Nonlinear dimensionality reduction

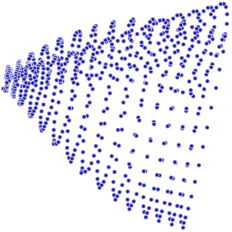

Nonlinear Dimensionality Reduction (NLDR) is a set of techniques in machine learning and statistics for reducing the dimensionality of data while preserving the intrinsic geometry of the original dataset. Unlike linear methods such as Principal Component Analysis (PCA), NLDR methods can handle complex, nonlinear structures in high-dimensional data. These techniques are widely used in areas such as computer vision, bioinformatics, and speech recognition, where the data often reside on a nonlinear manifold within a higher-dimensional space.

Overview

The goal of NLDR is to find a low-dimensional representation of high-dimensional data that captures its significant structures or patterns. The approach rests on the manifold hypothesis, which suggests that high-dimensional data points tend to lie on or near a low-dimensional manifold embedded within the high-dimensional space. NLDR techniques aim to uncover this low-dimensional embedding without assuming linearity in the data's structure.
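A minimal sketch of the idea, using a helix as a stand-in for a manifold: every point has three coordinates, yet a single parameter `t` determines its position. The function names and the `pitch` value are illustrative choices, not part of any standard API. The example compares the straight-line distance between two points with the distance measured along the curve.

```python
import math

def helix_point(t, pitch=0.1):
    """Map a single parameter t to a point in 3-D space."""
    return (math.cos(t), math.sin(t), pitch * t)

def along_curve_distance(t1, t2, pitch=0.1):
    """Arc length of the helix between parameters t1 and t2."""
    return math.sqrt(1.0 + pitch ** 2) * abs(t2 - t1)

# Two points one full turn apart on the helix
start, end = 0.0, 2.0 * math.pi
chord = math.dist(helix_point(start), helix_point(end))
arc = along_curve_distance(start, end)

print(f"straight-line distance:   {chord:.3f}")
print(f"distance along the curve: {arc:.3f}")
```

After one full turn the two points sit almost on top of each other in the ambient space, although they are far apart along the curve. A method that assumes linear structure sees only the first distance; NLDR methods try to respect the second.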

Techniques

Several NLDR techniques have been developed, each with its own approach to preserving the data's intrinsic properties. Some of the most notable methods include:

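- Isomap: Isomap extends classical multidimensional scaling (MDS) by replacing straight-line distances with geodesic distances, estimated as shortest paths through a nearest-neighbor graph. These are more reflective of the true distances along the manifold than straight-line distances in the high-dimensional space.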

- t-Distributed Stochastic Neighbor Embedding (t-SNE): t-SNE is particularly well-suited for visualizing high-dimensional data in two or three dimensions. It converts the pairwise distances between data points into similarity probabilities, once in the high-dimensional space and once in the low-dimensional embedding, and then positions the embedded points to minimize the Kullback-Leibler divergence between the two distributions.

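- Locally Linear Embedding (LLE): LLE expresses each high-dimensional data point as a weighted combination of its nearest neighbors, then finds a lower-dimensional embedding in which the same weights still reconstruct each point. This preserves local neighborhood relationships and, through them, the manifold's structure.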

- Autoencoders: In the context of neural networks, autoencoders learn a compressed, encoded representation of data, which can then be decoded to reconstruct the input. The narrowest middle layer of the autoencoder (the bottleneck) acts as the low-dimensional representation of the data.
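As one illustration of the methods above, the geodesic-distance step used by Isomap can be sketched in plain Python. This is a toy version under stated assumptions: the helper names `knn_graph` and `shortest_paths` are hypothetical, the data are 21 points on a half circle, and the embedding step that would follow in a full Isomap implementation is omitted.

```python
import math

def knn_graph(points, k):
    """Symmetric k-nearest-neighbor graph as a dense matrix of edge lengths."""
    n = len(points)
    graph = [[math.inf] * n for _ in range(n)]
    for i in range(n):
        graph[i][i] = 0.0
        by_distance = sorted(
            (j for j in range(n) if j != i),
            key=lambda j: math.dist(points[i], points[j]),
        )
        for j in by_distance[:k]:
            d = math.dist(points[i], points[j])
            graph[i][j] = graph[j][i] = d
    return graph

def shortest_paths(graph):
    """Floyd-Warshall all-pairs shortest paths (O(n^3), fine for a toy example)."""
    n = len(graph)
    dist = [row[:] for row in graph]
    for m in range(n):
        for i in range(n):
            for j in range(n):
                via = dist[i][m] + dist[m][j]
                if via < dist[i][j]:
                    dist[i][j] = via
    return dist

# 21 points evenly spaced on a half circle of radius 1
n_points = 21
points = [
    (math.cos(math.pi * i / (n_points - 1)), math.sin(math.pi * i / (n_points - 1)))
    for i in range(n_points)
]

geodesic = shortest_paths(knn_graph(points, k=2))
straight = math.dist(points[0], points[-1])
print(f"straight-line: {straight:.3f}, graph geodesic: {geodesic[0][-1]:.3f}")
```

The two endpoints of the half circle are 2 units apart in a straight line, while the path through the neighbor graph comes out close to the true arc length of π. The graph distance is only an approximation, and it depends on the choice of `k`.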

Applications

NLDR techniques have a wide range of applications across various fields. In bioinformatics, they are used to analyze and visualize genetic and proteomic data. In computer vision, NLDR helps in tasks such as face recognition and image classification by reducing the dimensionality of image data. In natural language processing (NLP), these methods assist in understanding the semantic structures of languages by analyzing high-dimensional word embeddings.

Challenges

Despite their advantages, NLDR techniques face several challenges. One of the main issues is computational cost: many methods rely on pairwise distances or neighborhood graphs, so time and memory requirements grow quickly with the number of data points. Additionally, choosing the right method and tuning its parameters for a specific dataset can be difficult, as there is no one-size-fits-all solution. The interpretation of the low-dimensional representations can also be challenging, especially in unsupervised learning scenarios where the dimensions do not have a clear, predefined meaning.
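To give a rough sense of the computational cost, the sketch below counts the point pairs and the memory a dense distance matrix would need. It assumes a method that stores every pairwise distance as a 64-bit value; the function name `pairwise_cost` is illustrative, and methods that use sparse or approximate neighbor search avoid the full matrix.

```python
def pairwise_cost(n_points, bytes_per_value=8):
    """Count distinct point pairs and the size of a dense n x n distance matrix."""
    n_pairs = n_points * (n_points - 1) // 2
    dense_matrix_bytes = n_points * n_points * bytes_per_value
    return n_pairs, dense_matrix_bytes

for n in (1_000, 10_000, 100_000):
    pairs, size = pairwise_cost(n)
    print(f"n={n:>7,}: {pairs:>13,} pairs, dense matrix ~ {size / 1e9:.2f} GB")
```

Multiplying the number of points by ten multiplies both figures by roughly a hundred, which is why dense pairwise approaches become impractical for large datasets.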

Conclusion

Nonlinear Dimensionality Reduction provides powerful tools for analyzing and visualizing complex, high-dimensional data. By capturing the essential structures of data, NLDR techniques facilitate the extraction of meaningful insights in various scientific and engineering domains. As data continues to grow in size and complexity, the development and application of NLDR methods will remain a critical area of research in machine learning and statistics.

Contributors: Prab R. Tumpati, MD