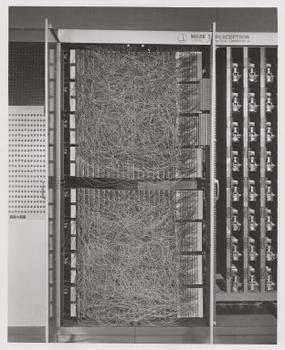

Perceptron

The perceptron is a type of artificial neural network invented in 1957 by Frank Rosenblatt. It is a linear classifier: it makes its predictions using a linear predictor function that combines a set of weights with the feature vector. Based on a simplified model of the biological neuron, the perceptron was one of the earliest attempts to make a machine learn from examples, and it laid foundational work for modern machine learning and artificial intelligence.

Overview

A perceptron takes several inputs, \(x_1, x_2, ..., x_n\), and produces a single binary output. In the simplest presentation the inputs are binary, although the same model applies to real-valued features. The perceptron computes a weighted sum of its inputs, adds a bias, and passes the result through a step function to produce the output. The weights, \(w_1, w_2, ..., w_n\), are real numbers expressing the importance of the respective inputs to the output. During training, the perceptron adjusts its weights with the perceptron learning rule whenever its predicted output differs from the target output.

Mathematical Model

The operation of a perceptron can be described by the formula:

\[ f(x) = \begin{cases} 1 & \text{if } w \cdot x + b > 0 \\ 0 & \text{otherwise} \end{cases} \]

where:

- \(f(x)\) is the output of the perceptron,
- \(w\) is the vector of weights,
- \(x\) is the vector of inputs,
- \(b\) is the bias, a constant that shifts the decision boundary away from the origin so that the model can fit the data better.
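As a minimal sketch of the formula above, the following Python function computes \(w \cdot x + b\) and applies the step function. The function name `perceptron_output` and the example weights are illustrative assumptions, not part of the original description; the weights were chosen by hand so that the unit behaves like a logical AND gate.

```python
from typing import Sequence


def perceptron_output(weights: Sequence[float], bias: float, inputs: Sequence[float]) -> int:
    """Return 1 if w . x + b > 0, otherwise 0."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if weighted_sum > 0 else 0


# Hypothetical hand-picked parameters (an assumption for illustration):
# with these values the unit fires only when both inputs are 1.
and_weights = [1.0, 1.0]
and_bias = -1.5

and_outputs = [
    perceptron_output(and_weights, and_bias, x)
    for x in [(0, 0), (0, 1), (1, 0), (1, 1)]
]
print(and_outputs)  # [0, 0, 0, 1]
```

Only the input \((1, 1)\) gives a positive sum (\(1 + 1 - 1.5 = 0.5\)), so it is the only case that produces an output of 1.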

Learning Rule

The perceptron learning rule is a simple algorithm used to update the weights. After presenting an input vector, if the output does not match the expected result, the weights are adjusted according to the formula:

\[ w_{new} = w_{old} + \eta (y - \hat{y})x \]

where:

- \(w_{new}\) and \(w_{old}\) are the new and old values of the weights, respectively,
- \(\eta\) is the learning rate, a small positive constant,
- \(y\) is the correct output,
- \(\hat{y}\) is the predicted output, and
- \(x\) is the input vector.

The bias is usually updated in the same way, as if it were a weight on a constant input of 1: \( b_{new} = b_{old} + \eta (y - \hat{y}) \). When the prediction is correct, \(y - \hat{y} = 0\) and nothing changes.
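The update rule can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than a reference implementation: the names `predict` and `train_perceptron`, the zero initialization, the stopping criterion, and the AND training set are all choices made for this example. The bias is updated with the same error term as the weights, which is a common convention and treated here as an assumption.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], int]


def predict(weights: Sequence[float], bias: float, inputs: Sequence[float]) -> int:
    """Step function applied to the weighted sum plus bias."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def train_perceptron(
    samples: List[Sample], learning_rate: float = 0.5, max_epochs: int = 100
) -> Tuple[List[float], float]:
    """Apply w <- w + eta * (y - y_hat) * x until an epoch has no mistakes."""
    weights = [0.0] * len(samples[0][0])  # assumption: start from zero
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # y - y_hat
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
                bias += learning_rate * error  # assumption: bias uses the same rule
        if mistakes == 0:  # every sample classified correctly
            break
    return weights, bias


# Illustrative training set: the logical AND function, which is linearly separable.
and_samples: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
predictions = [predict(weights, bias, x) for x, _ in and_samples]
print(predictions)  # [0, 0, 0, 1]
```

Because AND is linearly separable, the loop stops after a few passes over the data. The `max_epochs` cap is there for data that is not separable, where the rule would otherwise keep updating indefinitely.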

Limitations

The original perceptron was quite limited in its capabilities: a single-layer perceptron can only learn linearly separable patterns. In 1969, Marvin Minsky and Seymour Papert published a book titled "Perceptrons", which demonstrated these limitations, notably the inability of a single perceptron to represent the XOR function. This criticism contributed to a significant reduction in interest and funding for neural network research. However, multi-layer perceptrons, which stack several layers of units and later became a building block of deep learning, overcome many of these limitations.
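The XOR limitation can be checked directly from the step-function model \(f(x) = 1\) if \(w \cdot x + b > 0\), and 0 otherwise. The short derivation below is a standard argument, written here for two inputs with weights \(w_1, w_2\) and bias \(b\). Suppose a single perceptron computed XOR. The four input cases would require:

\[ \begin{aligned} (0,0) \mapsto 0 &: \quad b \le 0 \\ (1,0) \mapsto 1 &: \quad w_1 + b > 0 \\ (0,1) \mapsto 1 &: \quad w_2 + b > 0 \\ (1,1) \mapsto 0 &: \quad w_1 + w_2 + b \le 0 \end{aligned} \]

Adding the second and third inequalities gives \(w_1 + w_2 + 2b > 0\), so \(w_1 + w_2 + b > -b \ge 0\) by the first inequality. This contradicts the fourth inequality, so no choice of \(w_1\), \(w_2\), and \(b\) works.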

Applications

Despite its simplicity, the perceptron can be used for binary classification tasks such as spam detection, image classification, and sentiment analysis, provided the classes are at least approximately linearly separable in the chosen features. When multiple perceptrons are combined into a multi-layer perceptron (MLP), they can solve problems that are not linearly separable.
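To illustrate how combining perceptrons handles a problem that is not linearly separable, the sketch below wires three step-function units into a two-layer network that computes XOR. The weights are hand-picked for this example (an assumption, not learned values), and the names `step_unit` and `xor_network` are hypothetical. The hidden layer computes OR and NAND, and the output unit computes the AND of those two results.

```python
from typing import Sequence


def step_unit(weights: Sequence[float], bias: float, inputs: Sequence[float]) -> int:
    """A single perceptron: 1 if w . x + b > 0, otherwise 0."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


def xor_network(x1: int, x2: int) -> int:
    """Two-layer network of perceptrons with hand-picked (not learned) weights."""
    h_or = step_unit([1.0, 1.0], -0.5, [x1, x2])      # fires if at least one input is 1
    h_nand = step_unit([-1.0, -1.0], 1.5, [x1, x2])   # fires unless both inputs are 1
    return step_unit([1.0, 1.0], -1.5, [h_or, h_nand])  # fires only if both hidden units fire


xor_outputs = [xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(xor_outputs)  # [0, 1, 1, 0]
```

In practice the weights of a multi-layer perceptron are learned rather than set by hand, and the step function is usually replaced by a differentiable activation so that gradient-based training can be used.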

Credits:Most images are courtesy of Wikimedia commons, and templates, categories Wikipedia, licensed under CC BY SA or similar.

Contributors: Prab R. Tumpati, MD